Waterbears ARA module

I worked with creative agency Midnight Commercial, leading the development of a novel Google Ara module that combined state-of-the-art optics, custom microbiology, and a deeply critical approach to everyday technology.

The deliverable:

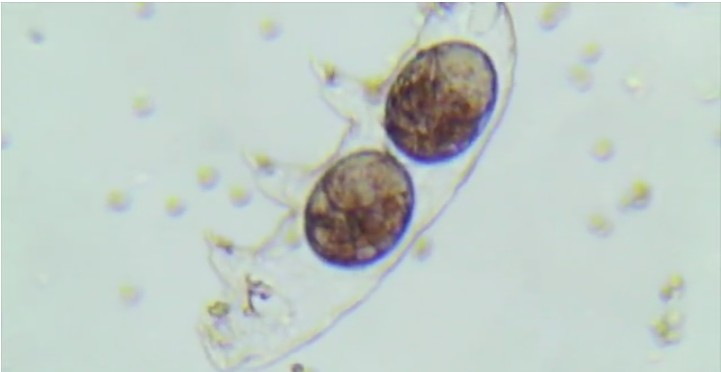

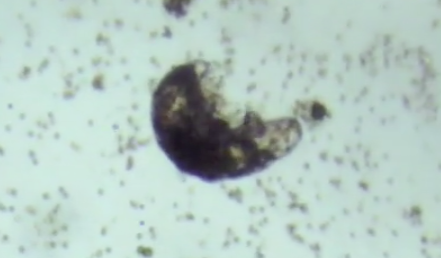

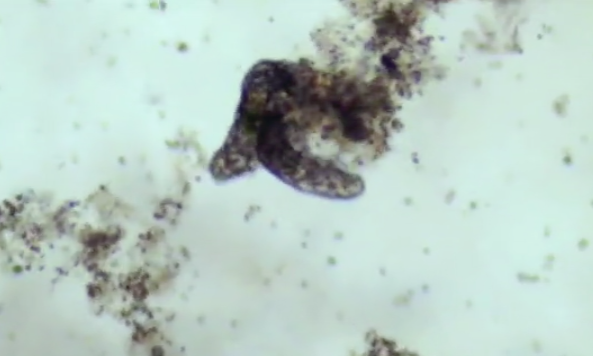

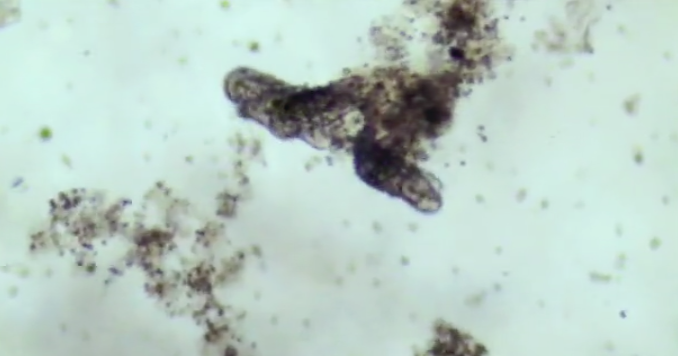

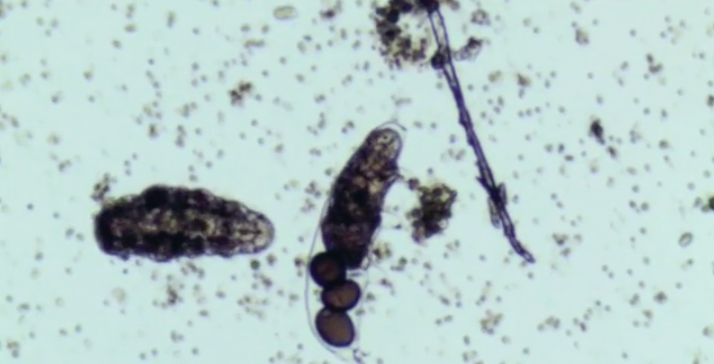

A tiny, fully manufacturable Ara module centered around a miniature microbiome, perfectly populated with a mix of algae, rotifers, ostracods, and yes, “water bears.”

Project Overview

I was brought on as Product Manager and Hardware Lead shortly after Midnight Commercial had successfully pitched the concept, and needed to quickly spin up a small team devoted to the task. Unquestionably, the best part of this project was working with a team of incredibly talented people, including Matthew Borgatti, Rebecca Lieberman, Vinyata Pany, Sam Posner, Jesse Gonzalez, Jennifer Bernstein, David Nuñez and Cody Daniel (with, of course, excellent support and guidance from our counterparts at Google).

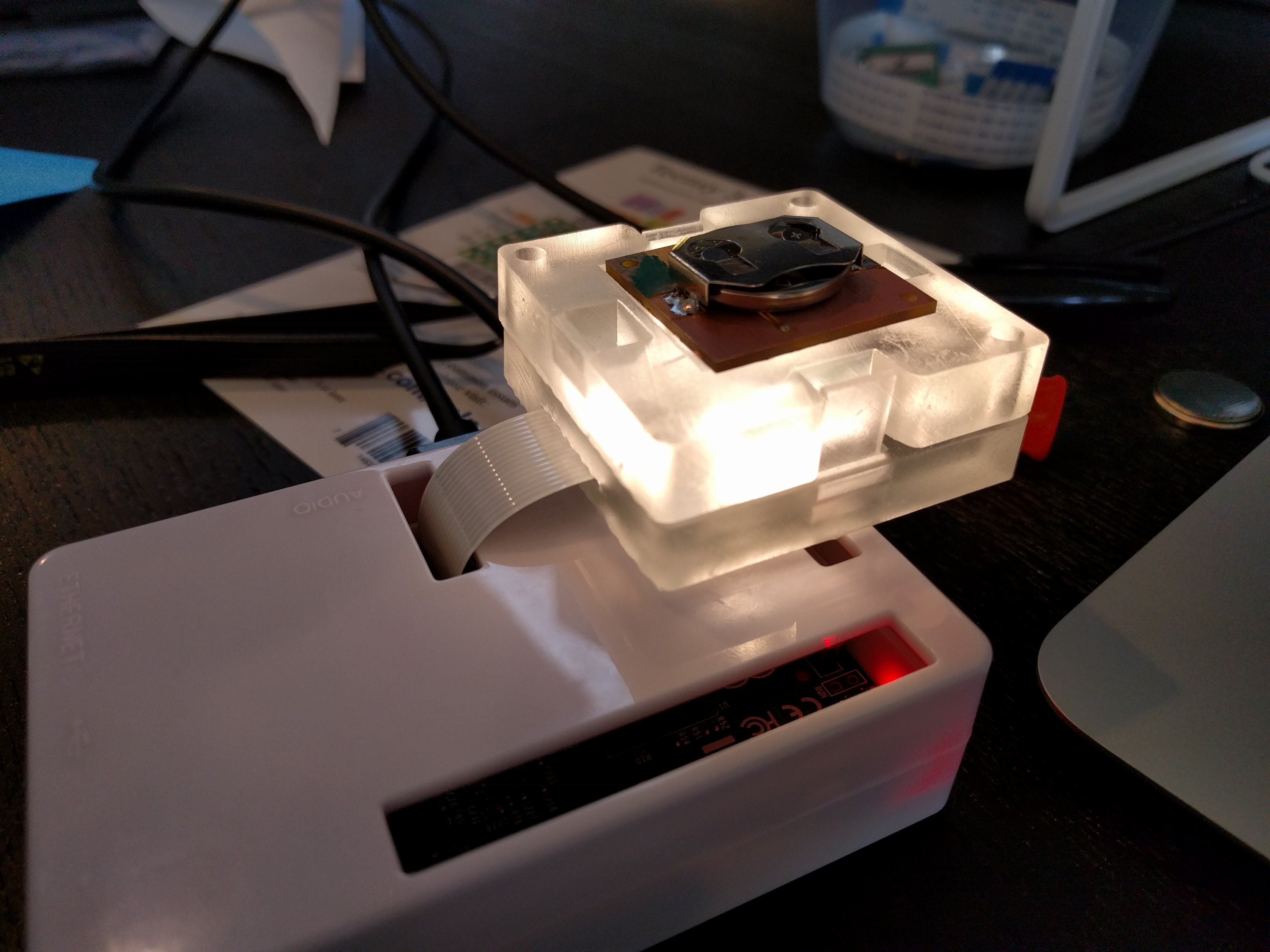

The module would contain an imaging sensor, illumination system, multi-point thermal sensing, and of course, a tiny optically-clear microbiome mated to a hybrid physical/computational imaging stack.

Hardware Integration

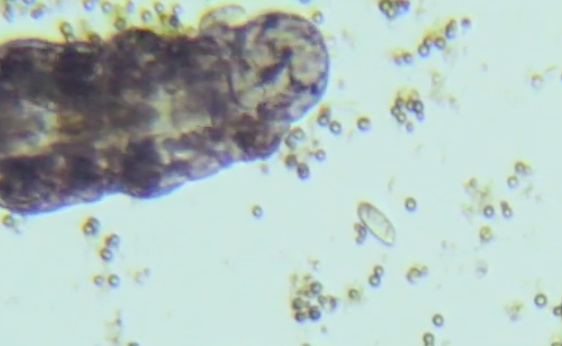

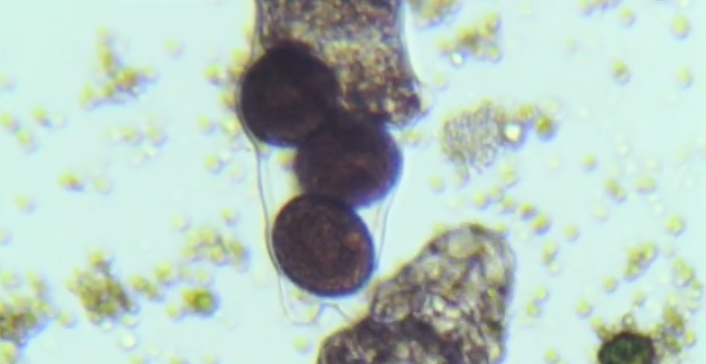

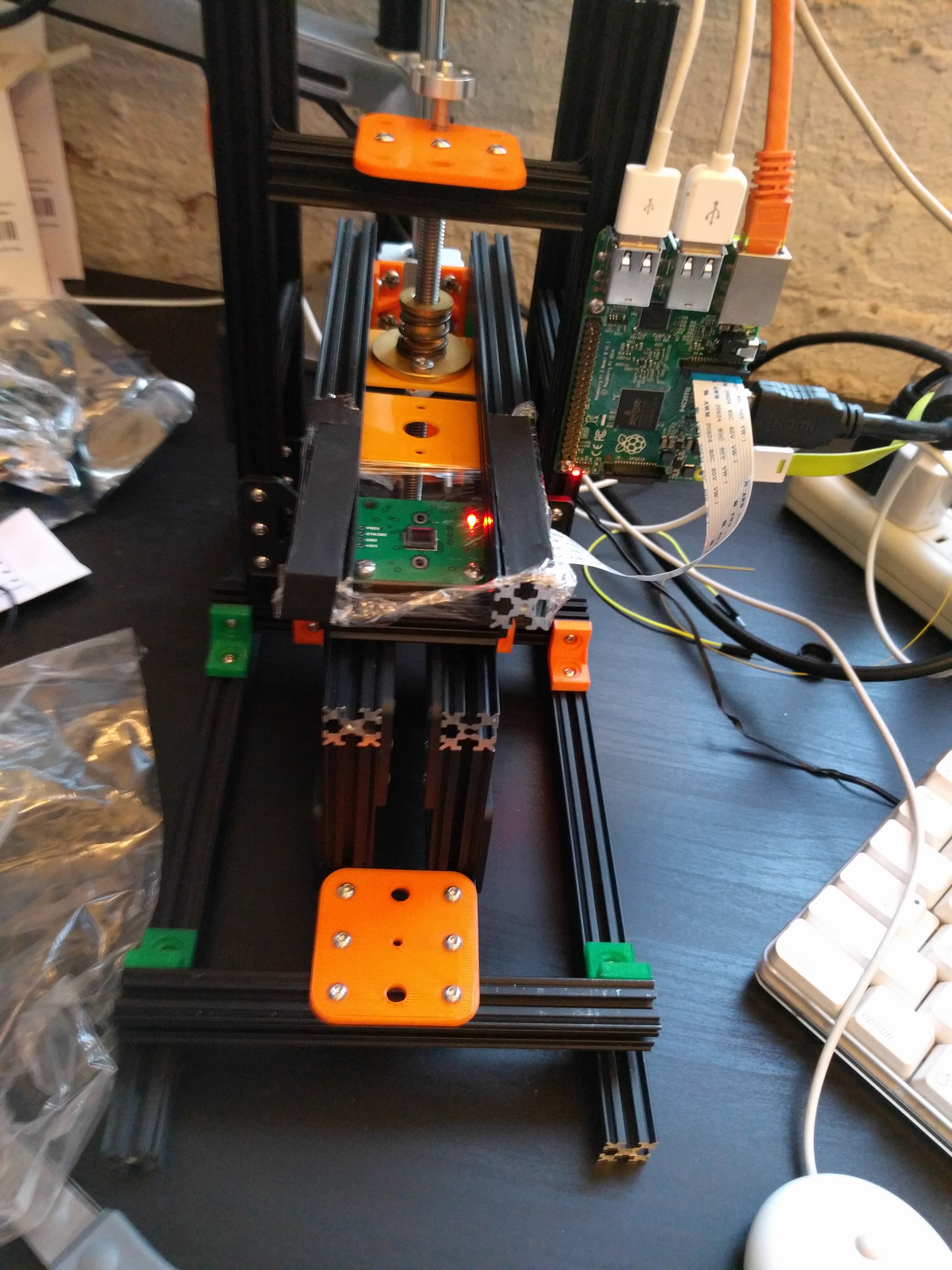

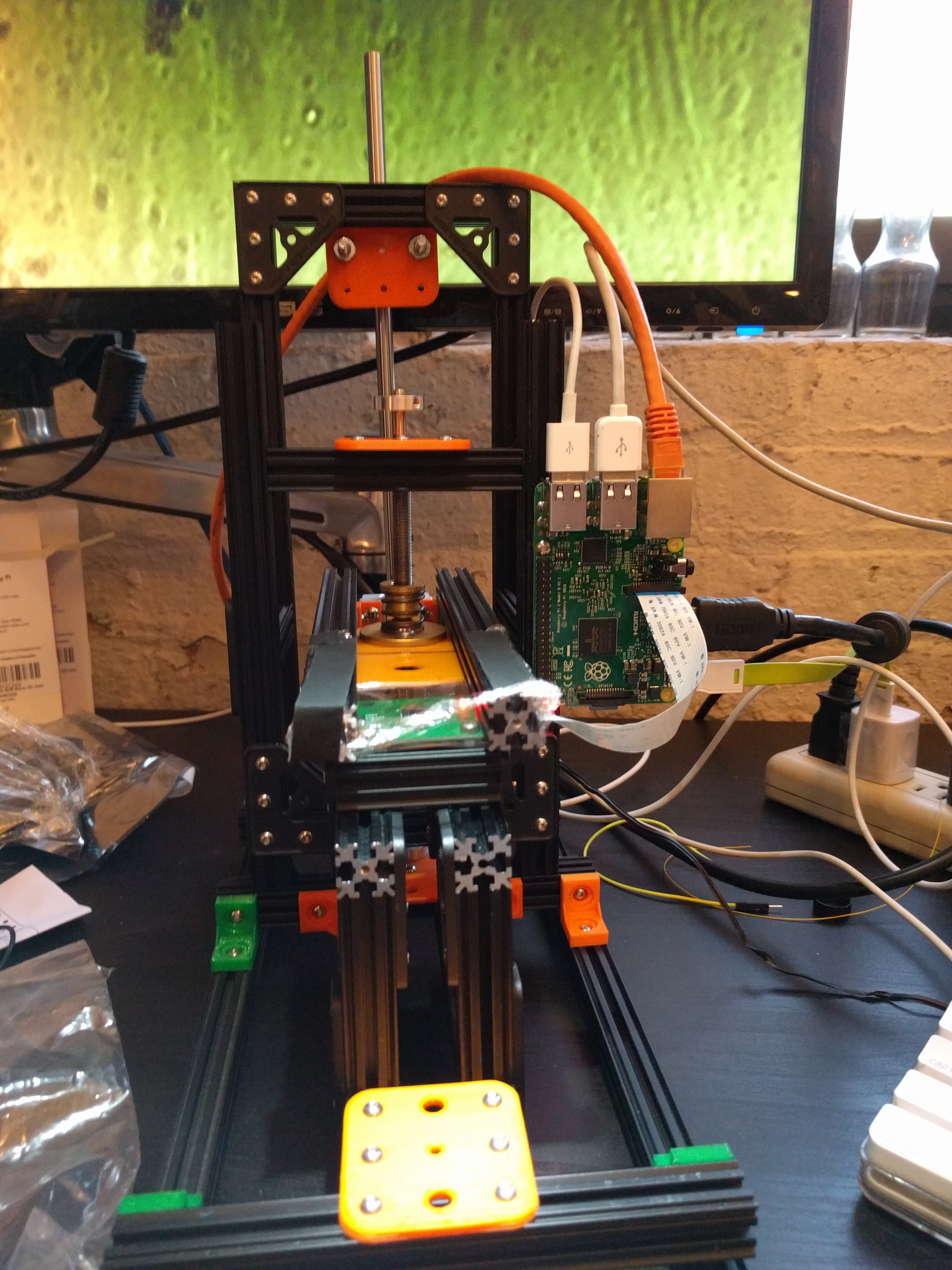

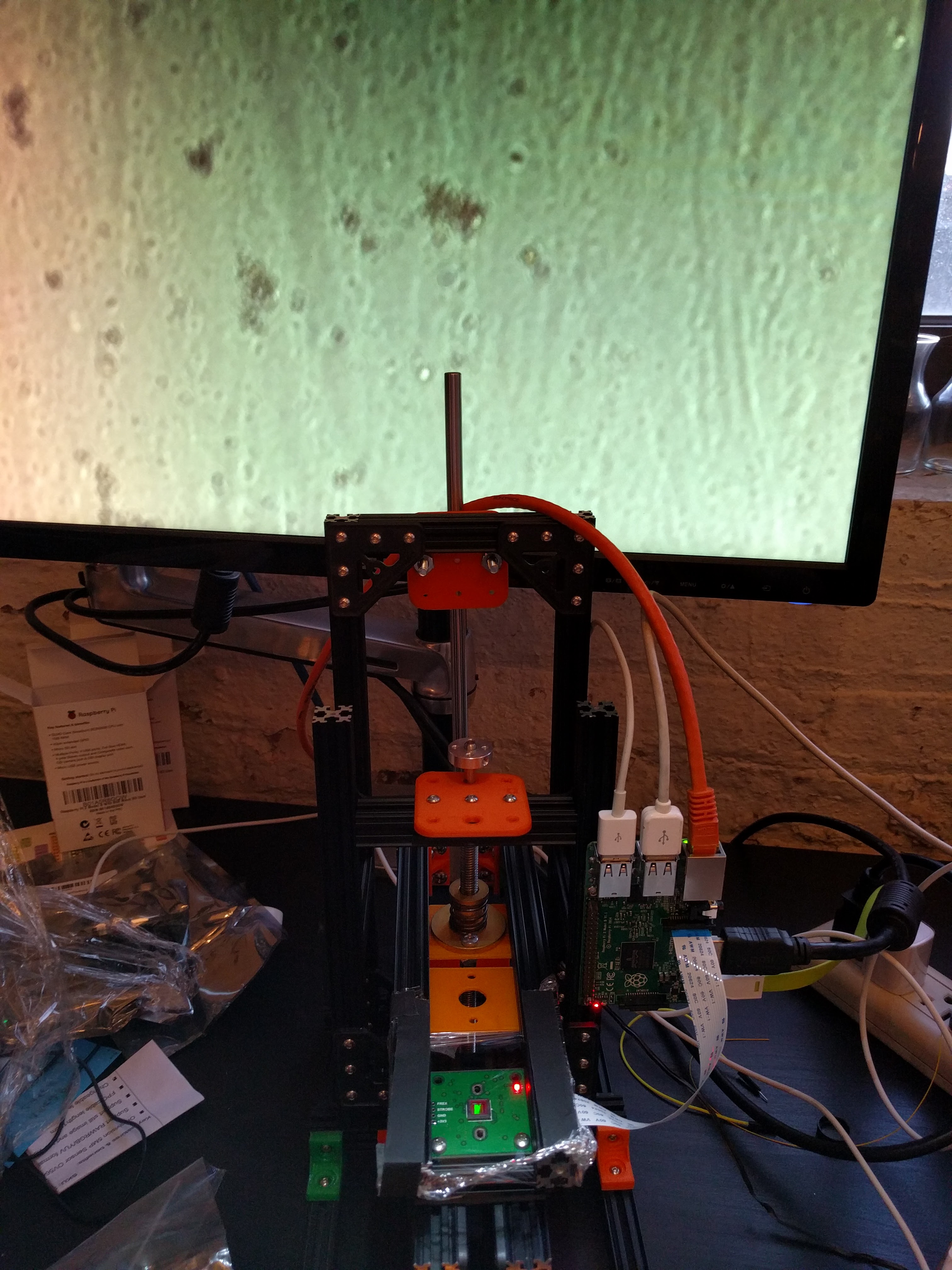

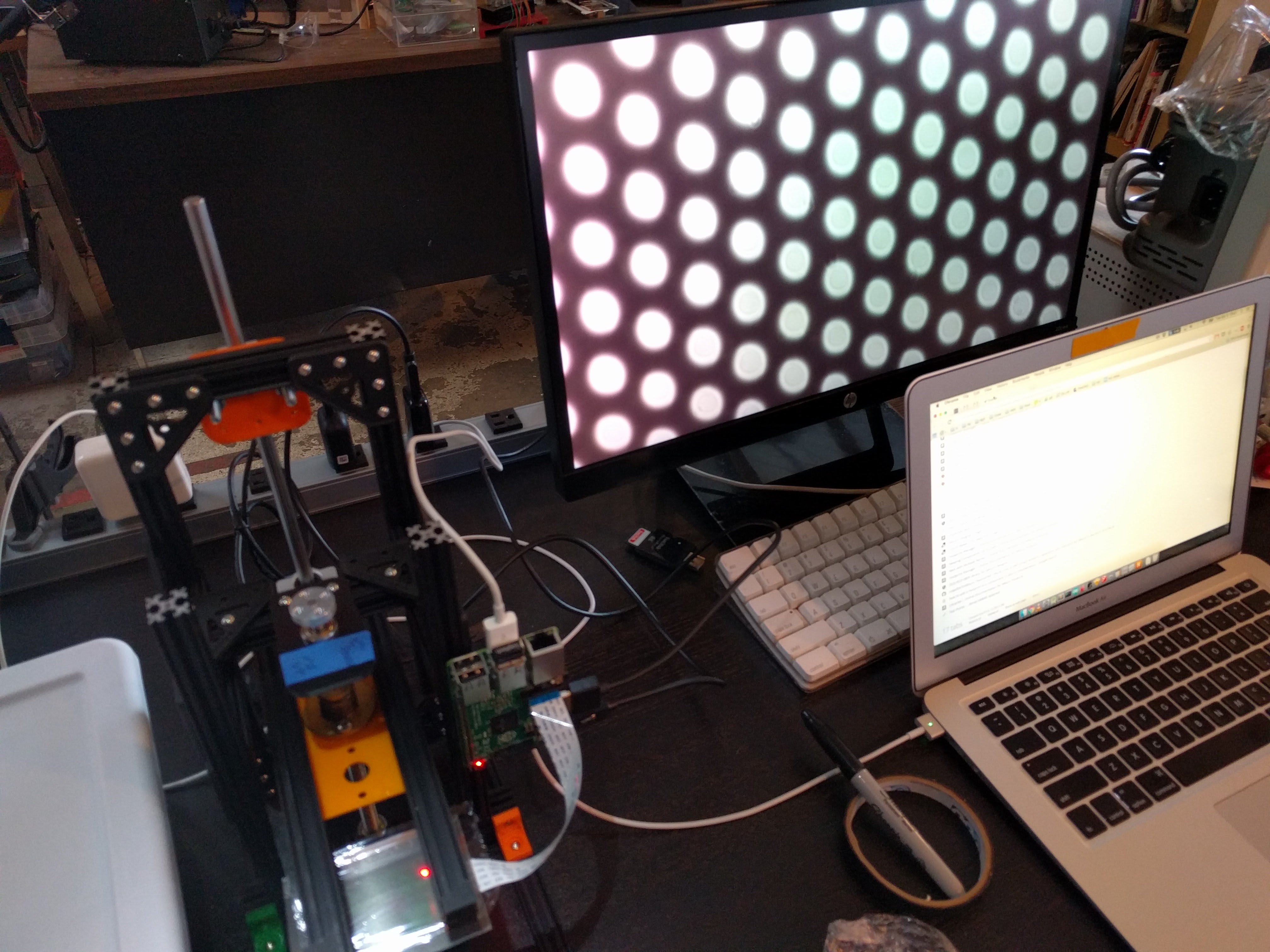

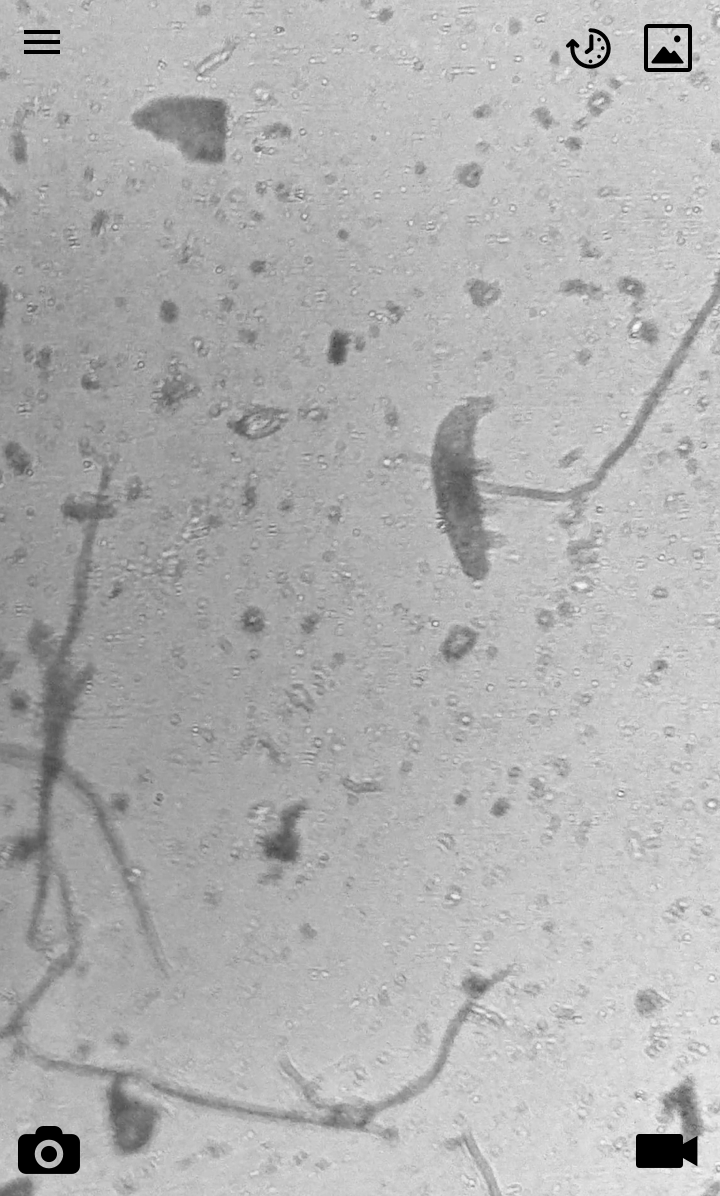

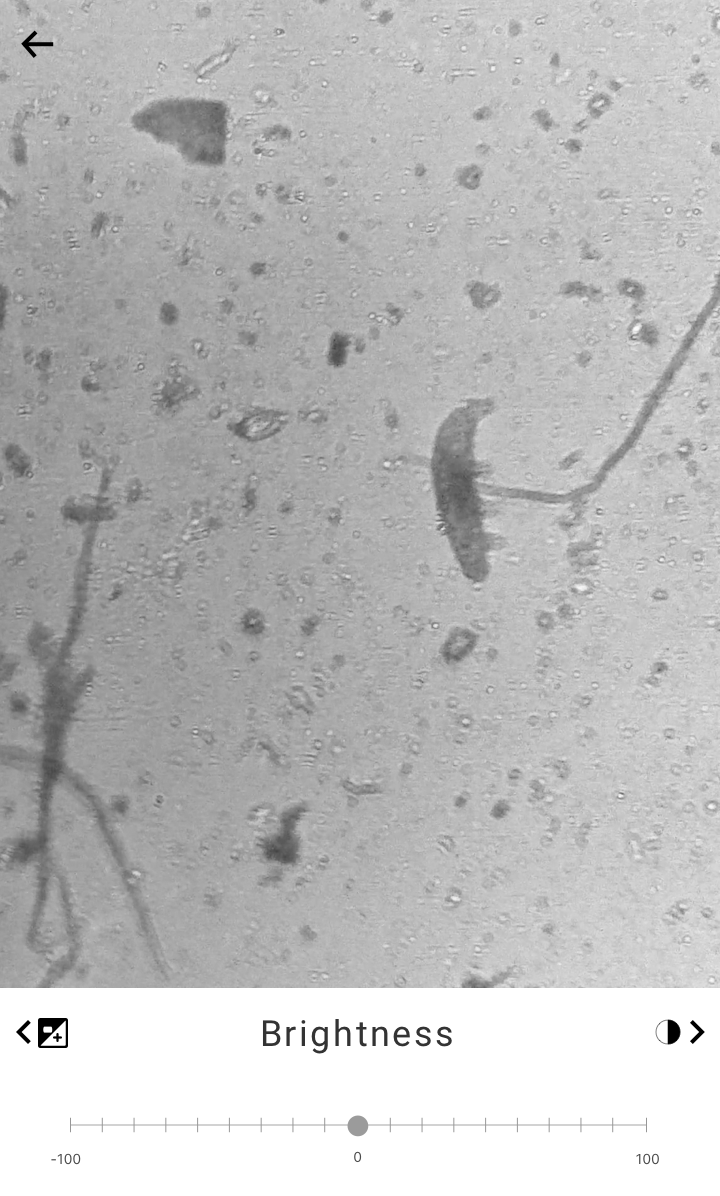

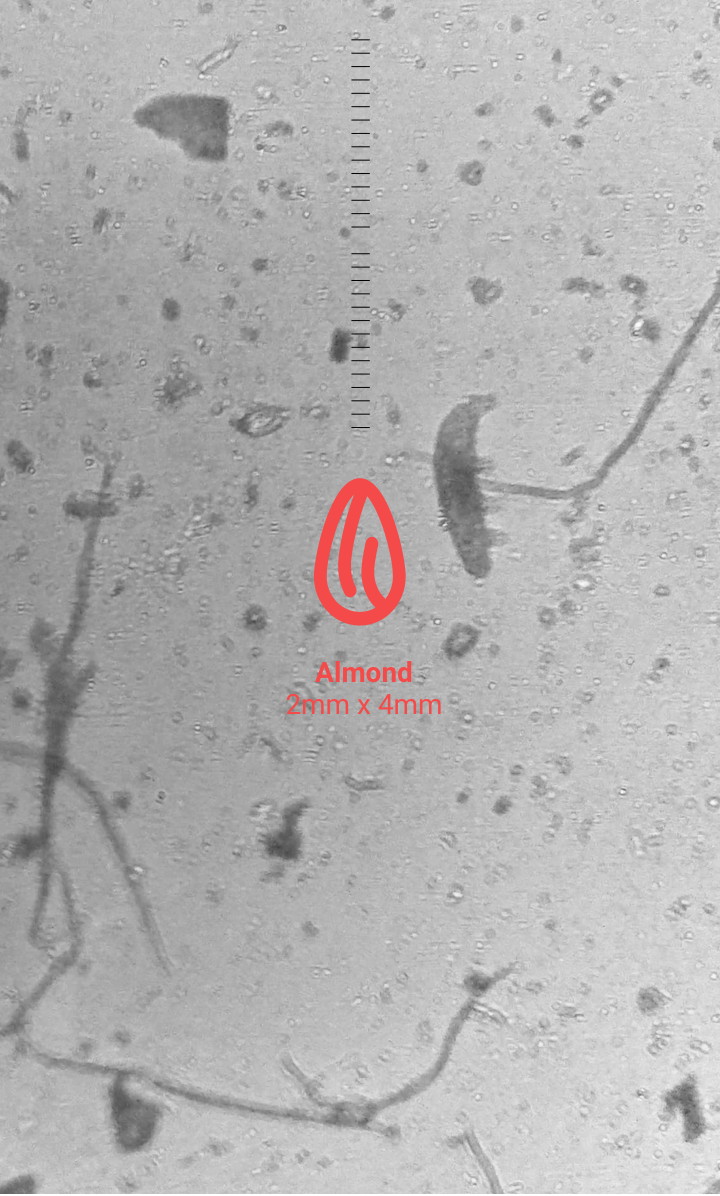

Imaging sensors are now densely pixelled enough to function without secondary optics: lensless. Our first tardigrade imaging tests were performed with a ~12 µm plastic membrane stretched taut over a carefully de-lensed imaging sensor. To our delight, the image quality from this admittedly brutal first proof-of-concept was enchanting: not perfect, by any means, but certainly promising. For the first round of imaging tests, we used a rig I built out of OpenBeam and some custom-printed fittings, which allowed us to repeatably and precisely position the sample containers over the bare imager. Our lead mechanical engineer (among other roles), Matt Borgatti, created subsequent optical and illumination testing rigs, each parametrically designed to test collimation, diffusion, dissipation, and longevity in the systems.

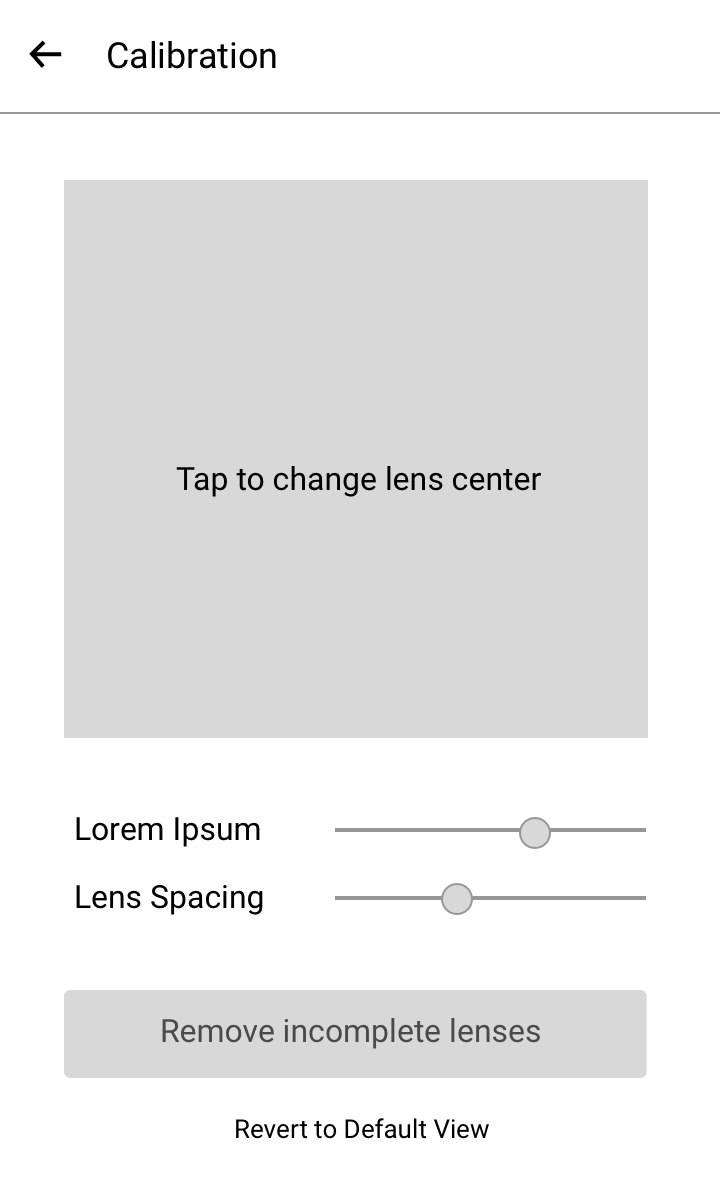

Our optics research track was focused on quickly getting to the state of the art in contact microscopy, microlens array imaging, and computational image reconstruction. Our goal was to get the best-looking image with the shortest (i.e., flattest, so it wouldn’t bulge out of the back of the phone) possible imaging stack that could be manufactured quickly and reliably. This was, in itself, a fascinating and challenging project, far too cool to summarize in a paragraph, so I’ll leave it at that.

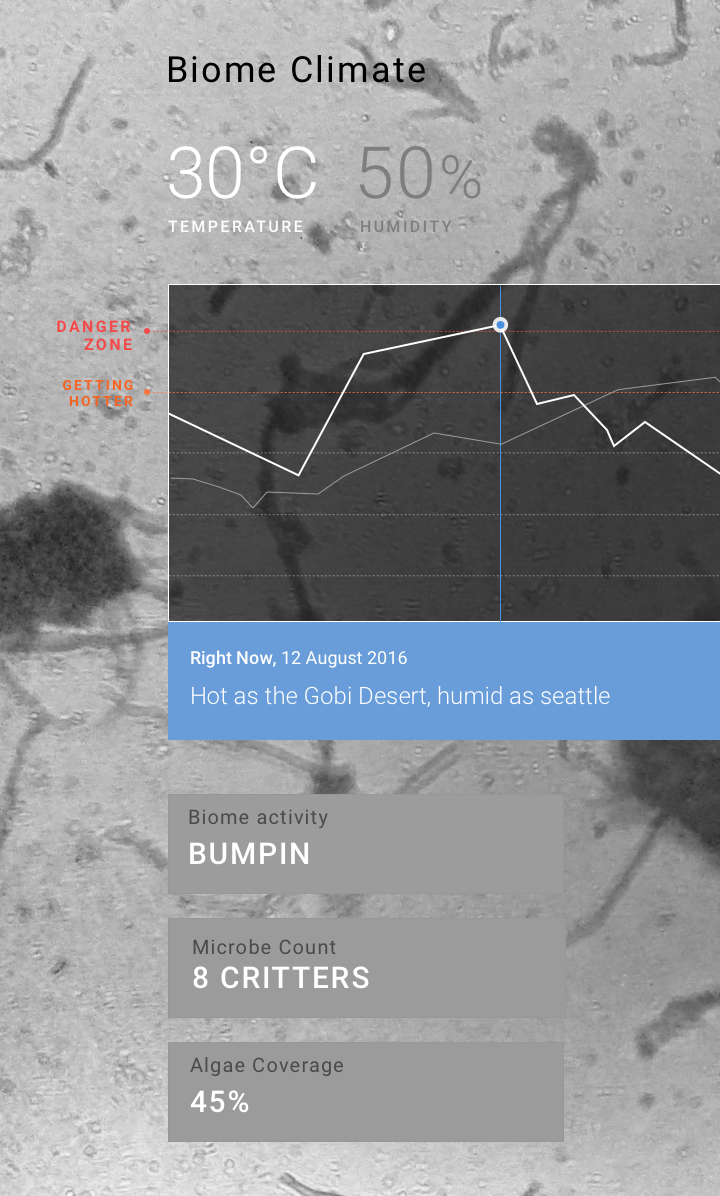

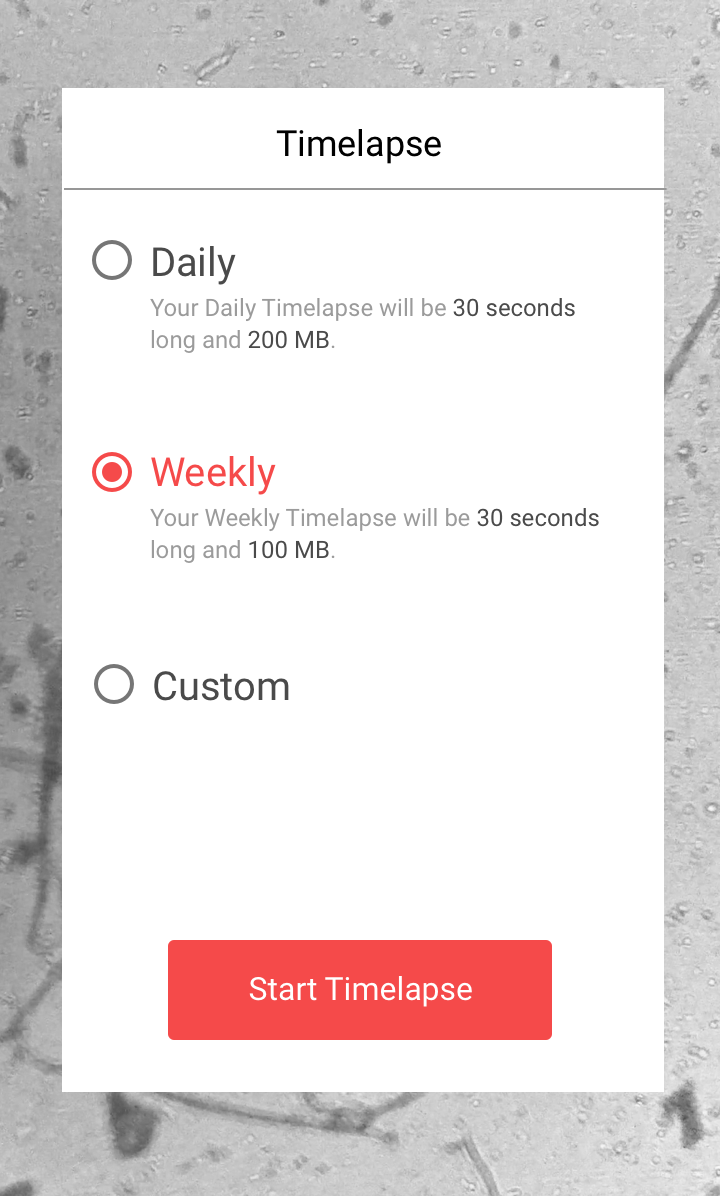

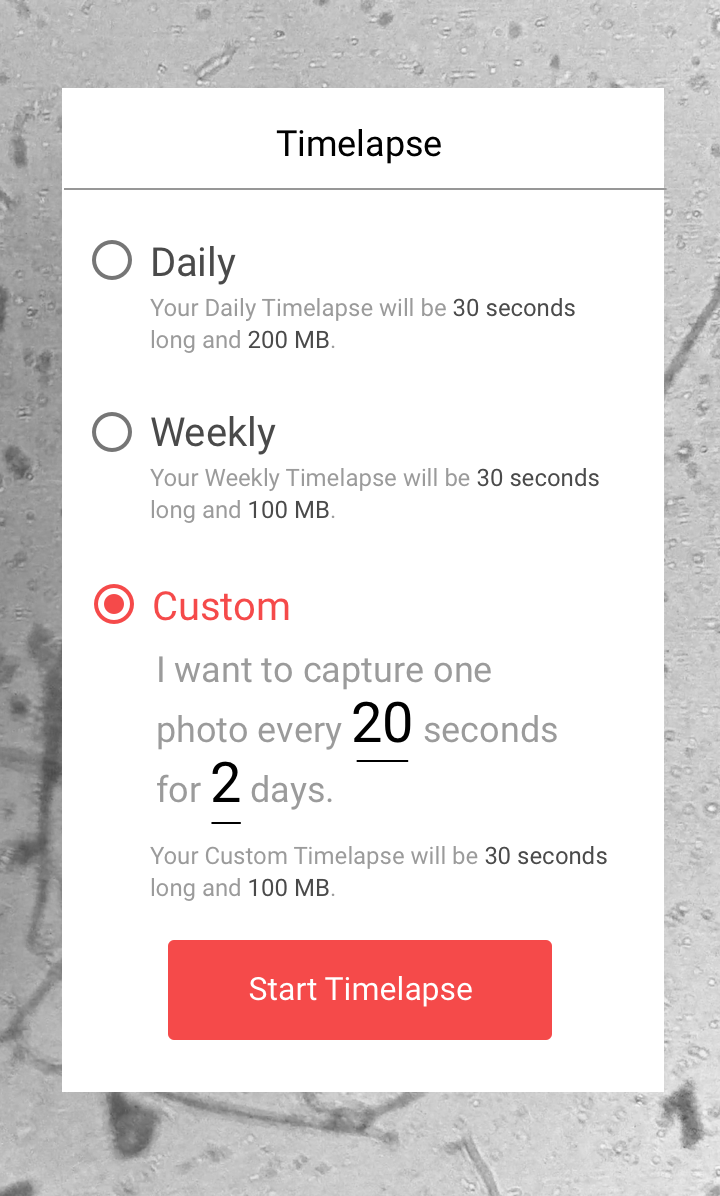

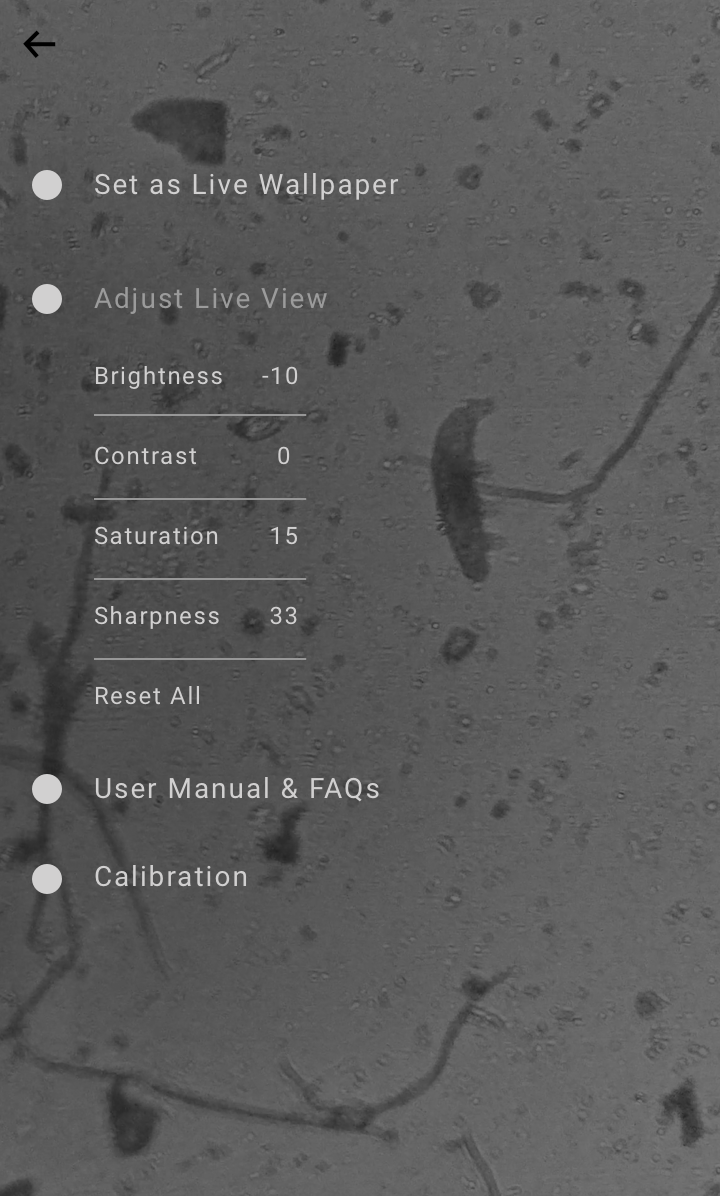

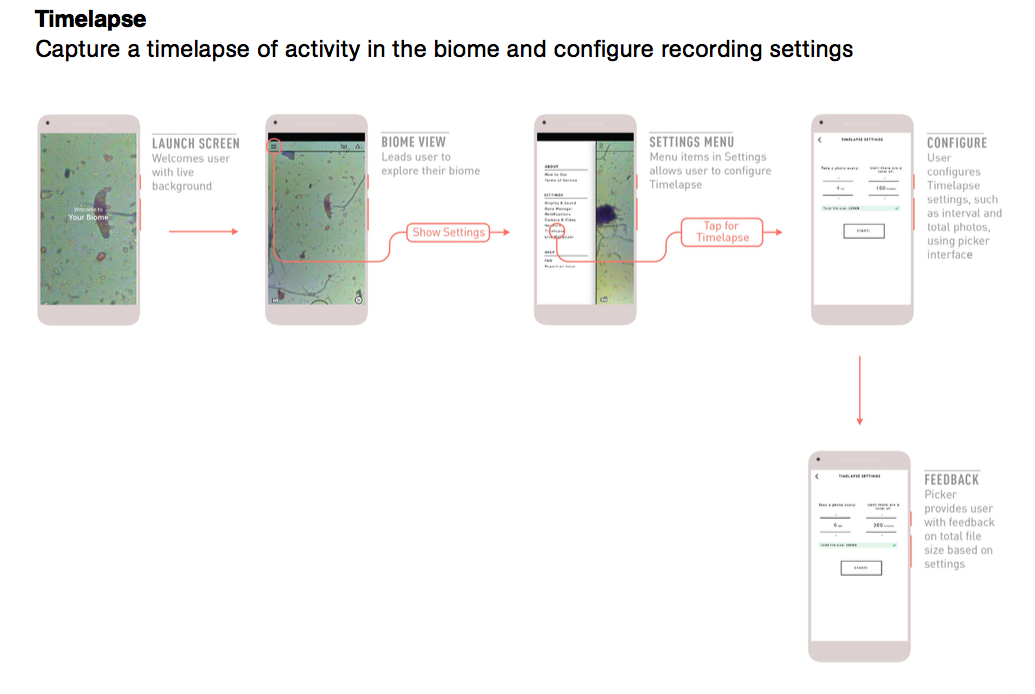

In parallel, we were quickly coming up to speed on tardigrade species diversity and husbandry practices, learning a great deal about how to select and populate small, self-stabilizing biosystems. For this track, we developed some useful timelapse and sensor-logging systems that allowed us to run many long-term experiments concurrently, while keeping up-to-the-minute video and sensor data available for review. One fun derivative of this software work is a side project I shared a while after Ara wrapped up.

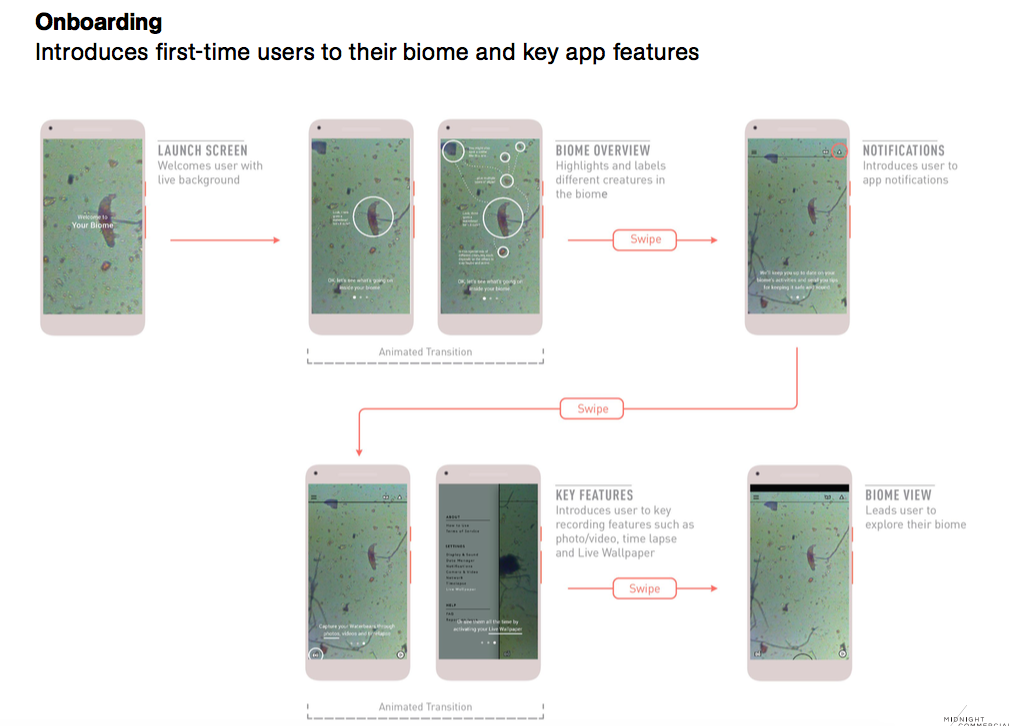

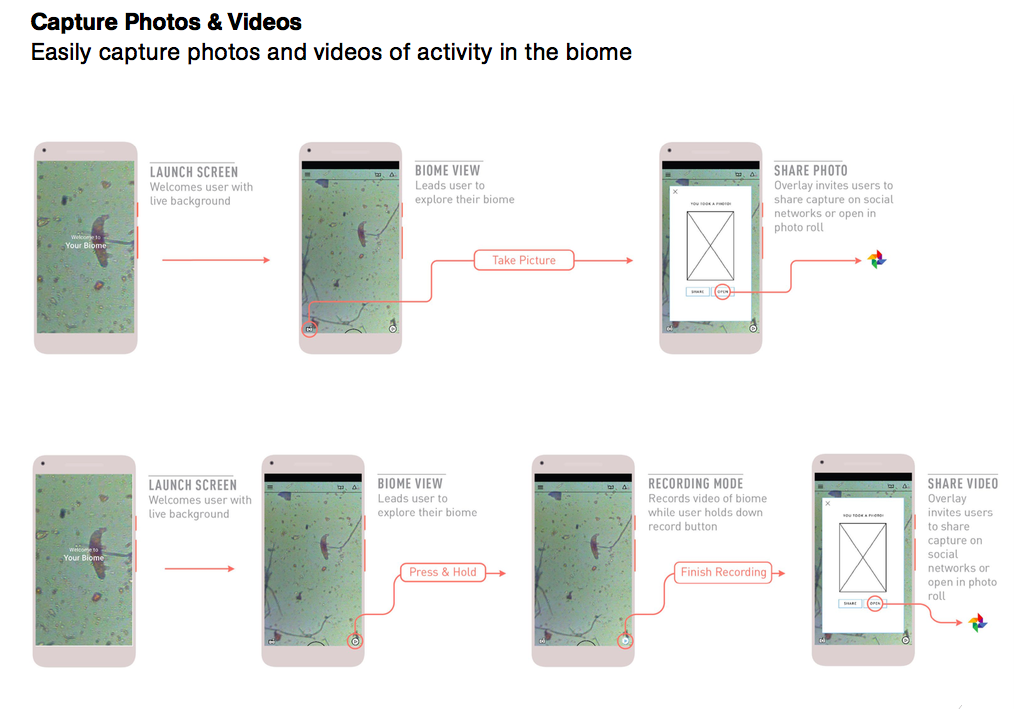

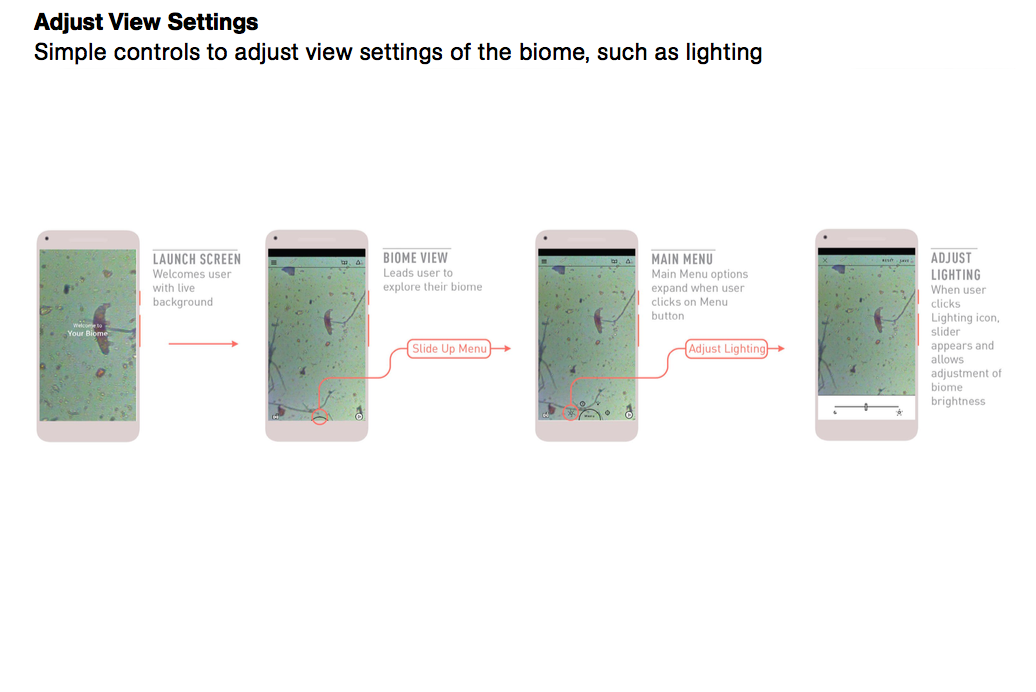

Module Experience

Above all, the module experience was designed around fostering a contemplative, empathetic relationship with the living creatures inside your phone. In all our systems and design decisions, we tried to make sure that our presentation and messaging would speak to the customer’s sense of hospitality—of sharing a weird, digital and physical space with these alien creatures.

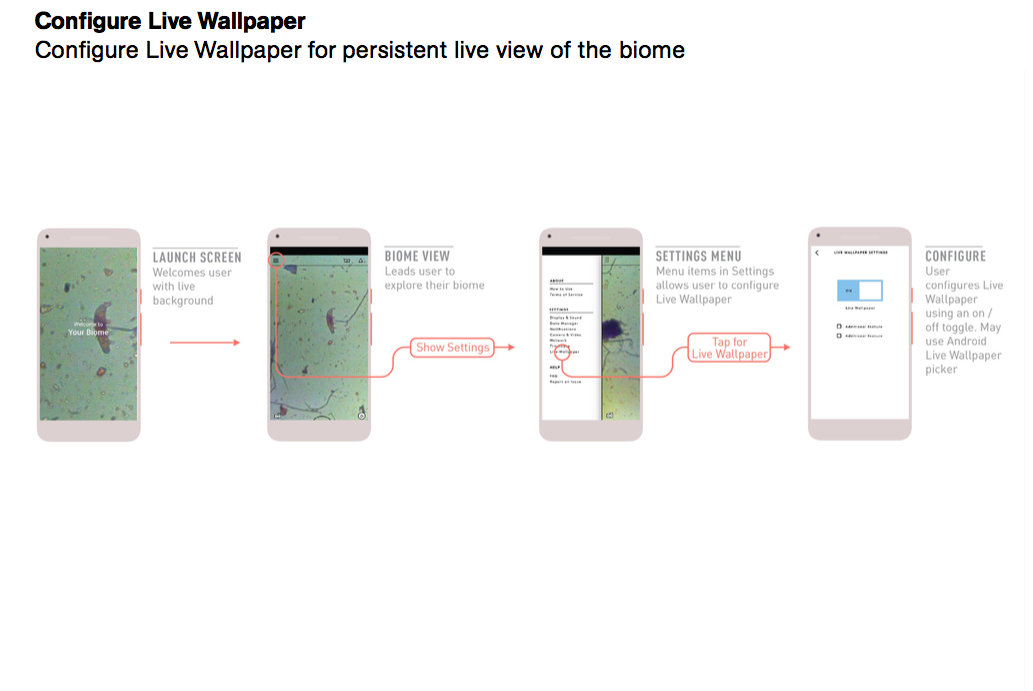

One of my favorite features of the app experience was our use of Android’s Live Wallpaper feature. With this feature, your phone’s background was a short video clip from your microbiome, updated every hour. I lived with this feature for a few weeks (the wallpaper was getting video remotely, from one of our tardigrade incubation systems I’d hooked up to a camera), and it was truly magical the way you could feel the relationship between you and your phone, and of course between you and the tardigrades, becoming richer and more complex.

Press: