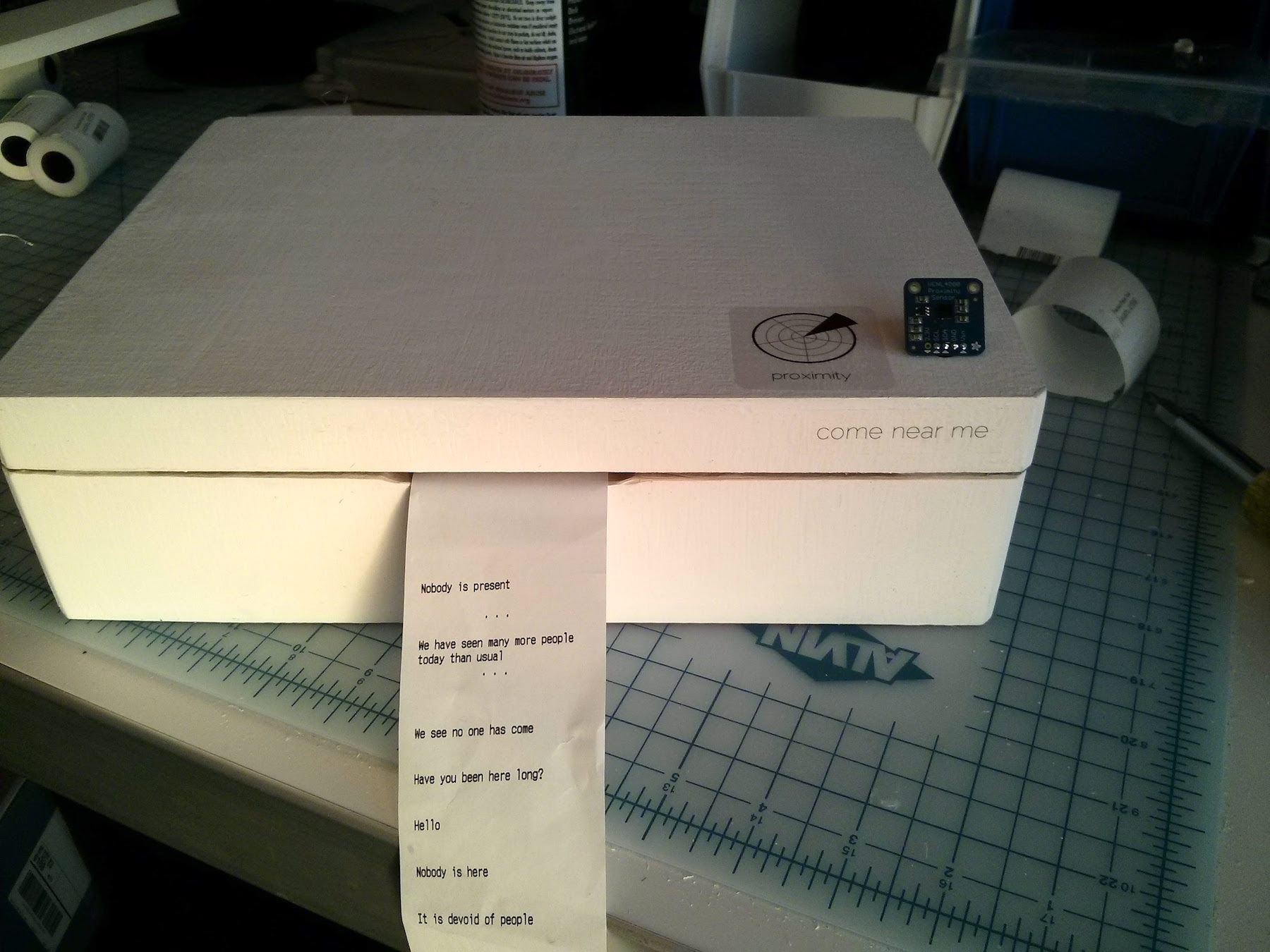

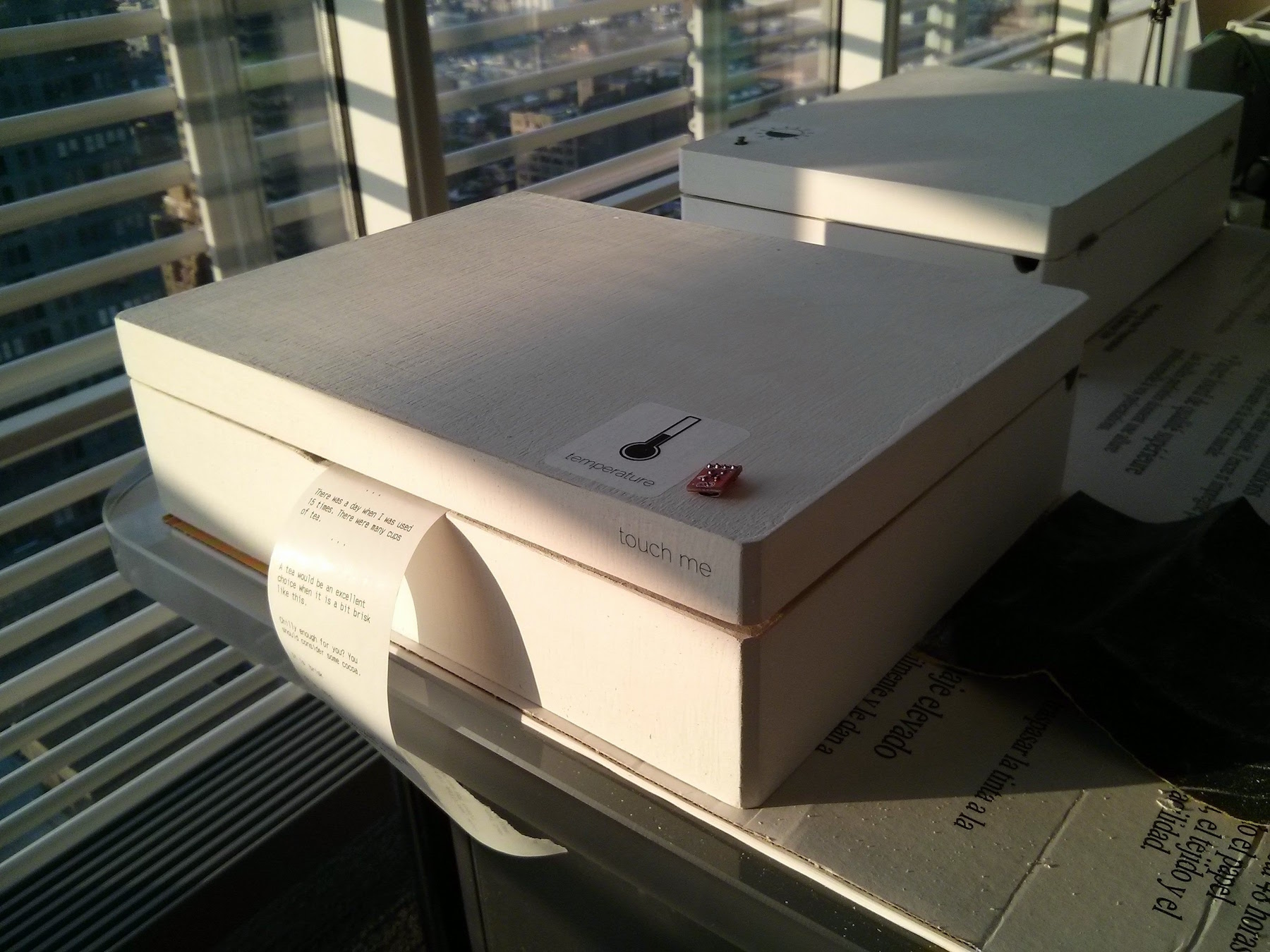

Object Record is an experimental “semantic listener”: it transposes a single sensor’s stimulus into language.

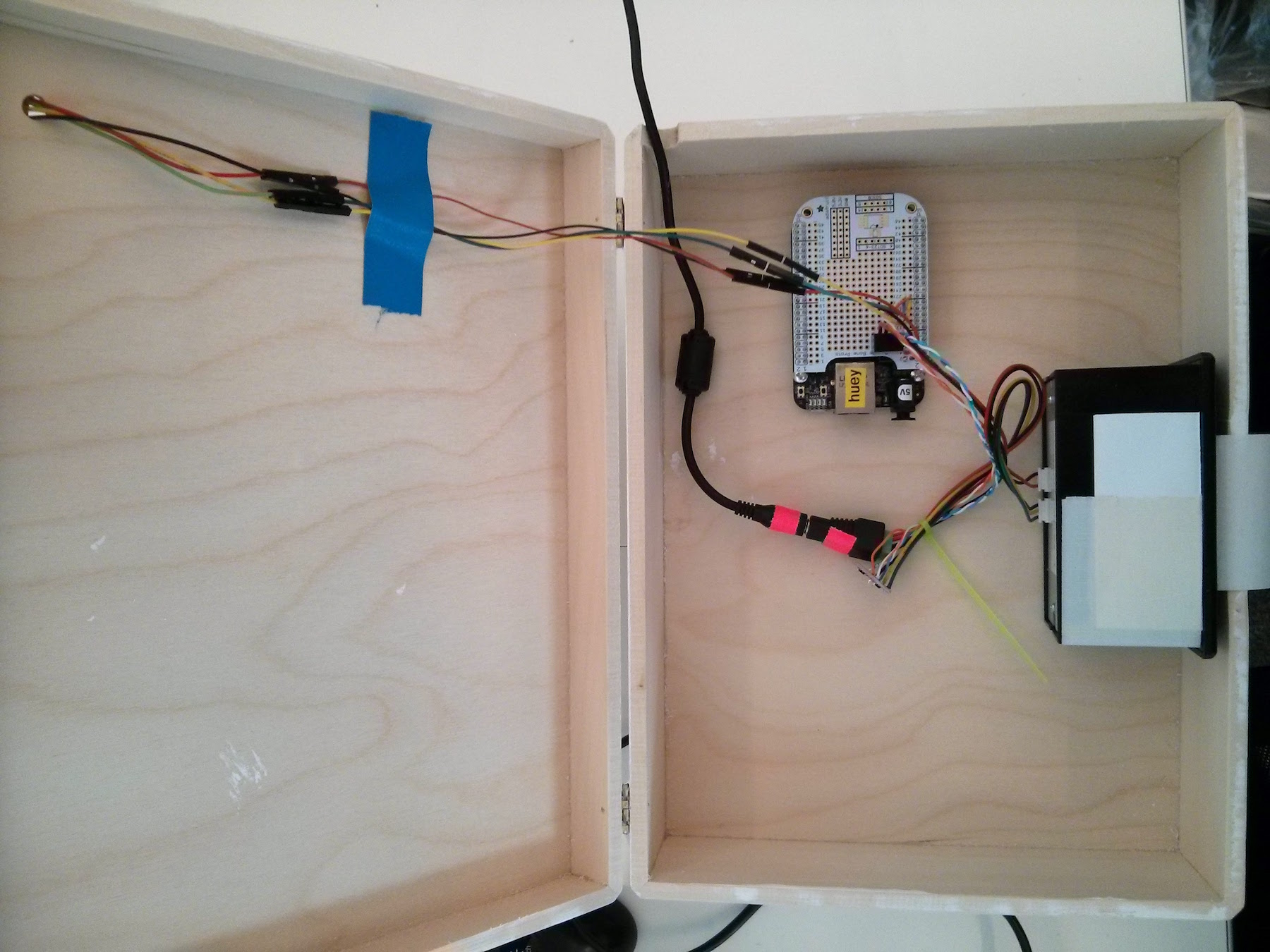

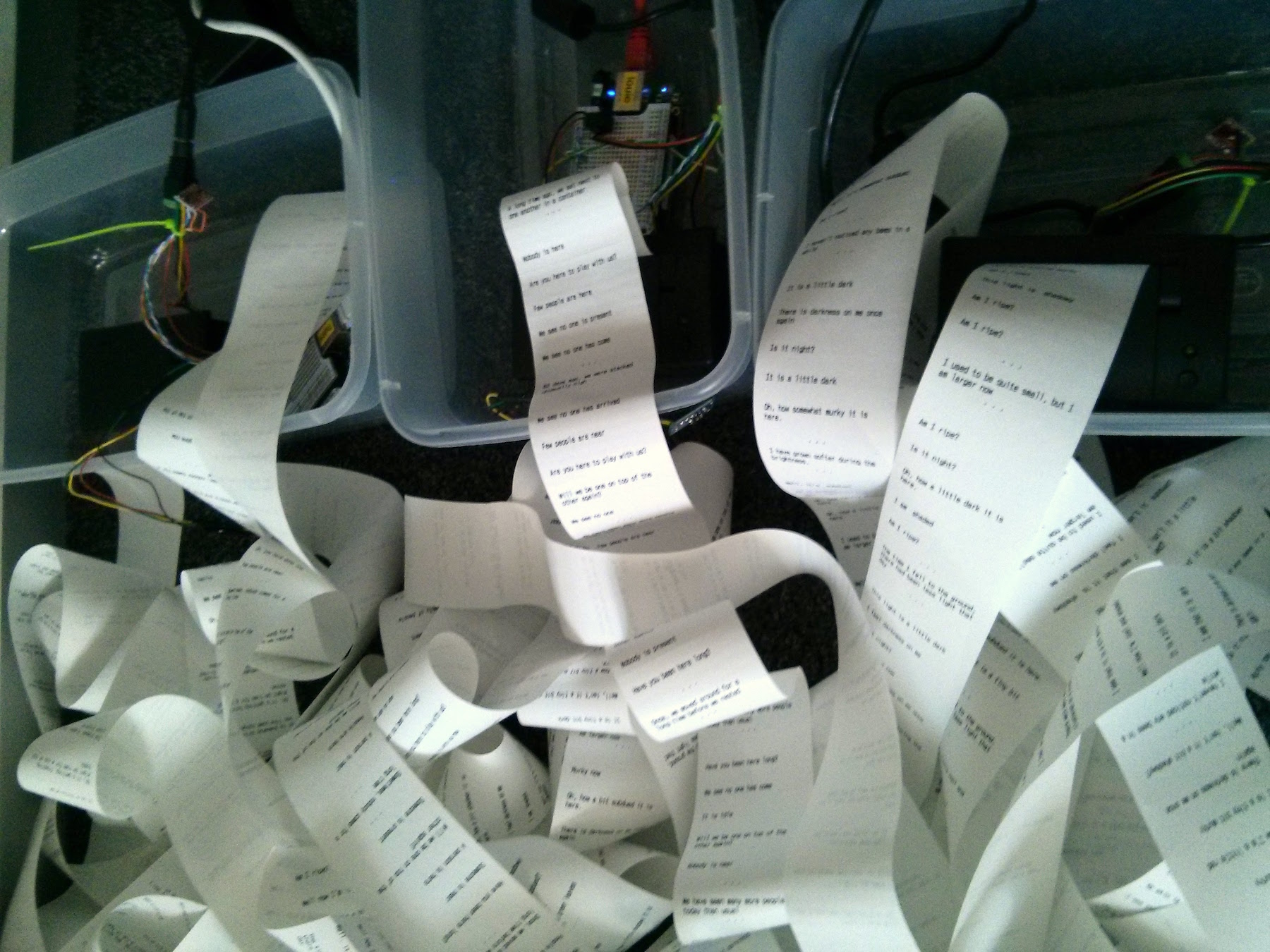

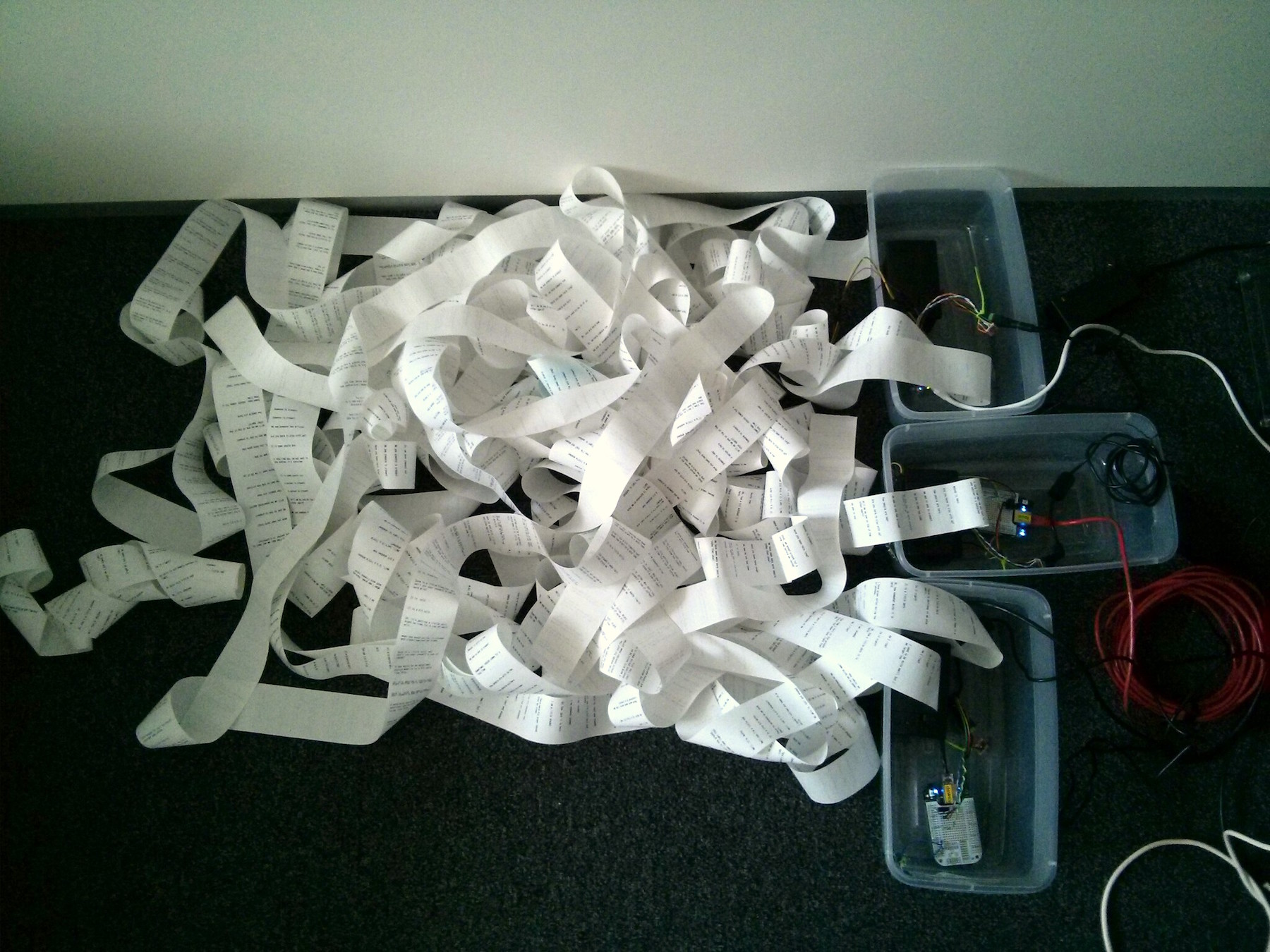

Each of three gallery-white boxes displays a single sensor on top: heat, proximity, or light. An embedded computer constantly evaluates the sensor and its local temporal context. This information is fed into an utterance model. When a substantial change has occurred, or after a period of substantial stability, the system generates an English-language utterance and prints it on a thermal printer. As the installation goes on, this trail of machinic utterances accumulates in front of the device.
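The trigger logic described above can be sketched roughly as follows. This is a minimal illustration, not the installation's actual code: the `ChangeDetector` class, its window size, and its thresholds are all hypothetical. It compares each new reading against a short rolling baseline and reports either a substantial change or a sustained quiet period.

```python
from collections import deque
from statistics import mean


class ChangeDetector:
    """Hypothetical sketch: flag a big jump or a long quiet spell in one sensor."""

    def __init__(self, window=5, change_threshold=10.0, stable_after=8):
        self.recent = deque(maxlen=window)        # rolling baseline of readings
        self.change_threshold = change_threshold  # assumed units: raw sensor values
        self.stable_after = stable_after          # quiet readings before "stable"
        self.quiet_count = 0

    def update(self, reading):
        """Return "change", "stable", or None for one new reading."""
        event = None
        if len(self.recent) == self.recent.maxlen:
            baseline = mean(self.recent)
            if abs(reading - baseline) >= self.change_threshold:
                event = "change"
                self.quiet_count = 0
                self.recent.clear()  # start a fresh baseline after a jump
            else:
                self.quiet_count += 1
                if self.quiet_count == self.stable_after:
                    event = "stable"
        self.recent.append(reading)
        return event
```

Each non-`None` event would then be handed to the utterance model; a reading that neither jumps nor completes a quiet spell produces nothing, which is what keeps the printer from chattering continuously.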

The project was shown in the gallery at the Future of Storytelling conference. I worked with a colleague to develop the conference’s brief into a buildable concept. Once we had determined that a prose-transducer was the focus, I was responsible for the interaction design, embedded systems integration, and the development of the language-generation software. Initially, I wrote a Markov model trained on the works of P.G. Wodehouse, but before deployment it was replaced with a simpler Mad Libs-style approach.
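For a sense of what a Mad Libs-style generator looks like, here is a minimal sketch. The templates, word lists, and the `utter` function are invented for illustration and are not taken from the project's code: a sentence frame is picked for the kind of event, and its blanks are filled from small vocabularies.

```python
import random

# Hypothetical sentence frames, keyed by the kind of event being reported.
TEMPLATES = {
    "change": [
        "The {sensor} has gone {adjective}, and rather {adverb} at that.",
        "Something {adjective} has {adverb} happened to the {sensor}.",
    ],
    "stable": [
        "The {sensor} remains {adjective}, {adverb} so.",
        "Nothing stirs; the {sensor} is {adverb} {adjective}.",
    ],
}

# Hypothetical vocabularies used to fill the blanks.
WORDS = {
    "adjective": ["peculiar", "restless", "placid", "brisk"],
    "adverb": ["suddenly", "quietly", "decidedly", "steadily"],
}


def utter(sensor, event, rng=random):
    """Fill a randomly chosen template for the given sensor and event."""
    template = rng.choice(TEMPLATES[event])
    return template.format(
        sensor=sensor,
        adjective=rng.choice(WORDS["adjective"]),
        adverb=rng.choice(WORDS["adverb"]),
    )


if __name__ == "__main__":
    print(utter("light", "change"))
```

Compared with a Markov model, this trades surprise for control: every printed line is grammatical and stays on the subject of the sensor, at the cost of a narrower range of phrasing.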

The project was a careful study in toeing the line between the narrativization of an inherently mechanical system’s input and a retreat to the pathetic fallacy. You can see all the code and attribution here.